Why everything is suddenly different: digitalisation is driving a phase of rapid innovation and market upheaval. In our series of essays, Philipp Bouteiller looks at the trends and technologies behind it and sheds light on what they mean for people. The first act is all about the origins of digitalisation and the evolution of microchips.

At the beginning of the last century, “computers” were still employees with pronounced numerical skills who worked mainly in the accounting departments of large companies, where they added up long series of numbers, for example (to compute = to calculate). Then came the first calculating machines. In 1941, Konrad Zuse built the Z3 in Berlin, the first “freely programmable calculator in binary switching technology and floating point calculation”. A few years later, the first modern transistors were developed, and the rest is history.

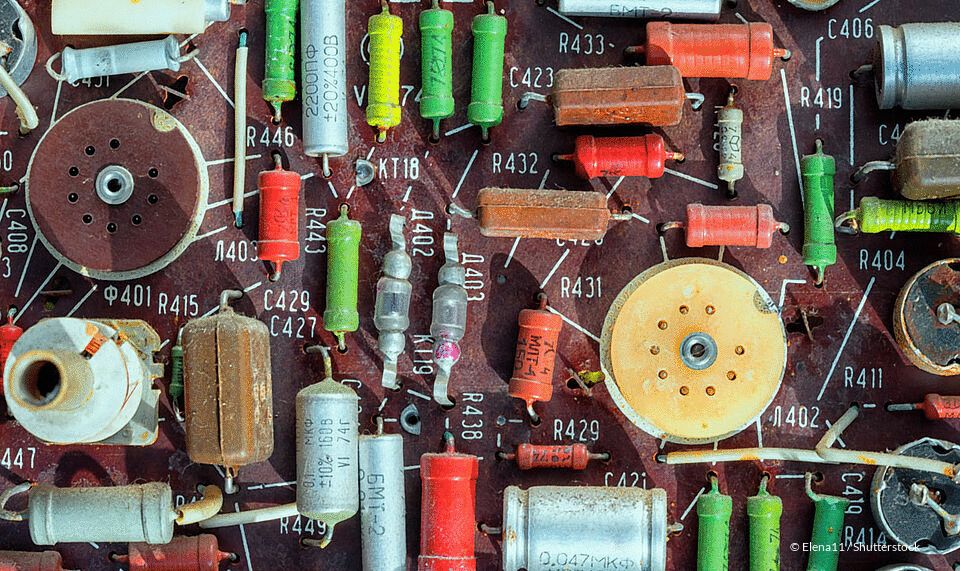

“Moore’s Law”, named after Gordon Moore, one of the co-founders of Intel, states that the computing power of computer chips (more precisely: the number or density of transistors per area on an integrated circuit) doubles approximately every two years. The iPhone X now has over 4000 times the computing power of the legendary high-performance computer “Cray-1”, which was sold to weather services and research institutions in the late 1970s for about $9 million (in today’s purchasing power, that would be an incredible $39 million!). So every smartphone is already a powerful supercomputer. Although the miniaturisation of circuits has now reached physical limits, the industry has essentially kept to the predicted growth curve in processor performance for more than 50 years. Today, not only are several processor cores combined on a single chip to further increase performance in a small area, but chips are even stacked three-dimensionally on top of each other.
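To get a feel for what doubling every two years means, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the function name `moore_factor` is made up for this example, and the two-year doubling period is the approximate figure quoted above, not an exact constant.

```python
def moore_factor(years, doubling_period=2.0):
    """Growth factor after `years`, assuming one doubling per `doubling_period` years.

    The two-year default is the rough figure from Moore's Law as stated
    in the text; real chips have not followed it exactly.
    """
    return 2 ** (years / doubling_period)


if __name__ == "__main__":
    # Print the idealised growth factor for a few time spans.
    for years in (2, 10, 20, 40):
        print(f"after {years:>2} years: about {moore_factor(years):,.0f} times as many transistors")
```

Under this idealised assumption, ten years bring a factor of 32 and forty years a factor of roughly one million, which is why such steady doubling adds up so quickly.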

As a result, we now have massive computing capacity in the smallest of spaces, and formerly complex and expensive processes have become so affordable that using computers is worthwhile even in areas where it was previously too costly and complicated. We are still a long way from the end of this development. After Google achieved its first successes with quantum technology back in 2015, other companies such as IBM and Intel have now built their own quantum computers.

Some say it’s just getting started now. The consequence? Everything that can be digitised will be digitised – regardless of whether we think it makes sense or not.

The 2nd act of the essay series will be published in 2 weeks.